We stand at a turning point in API design. The emergence of reasoning models like DeepSeek-R1 has transformed how we think about who - or what - consumes our APIs. While we once designed APIs with human developers in mind, the rise of agentic AI forces us to reconsider this approach.

Let me share what keeps me up at night: our APIs remain stuck in a human-first paradigm while AI agents become their primary consumers. These aren't simple scraping bots or automation scripts. Modern AI agents can plan, reason, and adapt their behaviour. They interact with APIs in ways we never imagined when we wrote our OpenAPI specifications and documentation.

Consider how AI agents approach APIs compared to human developers. Where a human reads documentation and experiments through trial and error, an AI agent processes the entire API surface in seconds. It can generate thousands of potential interaction patterns and test them systematically. This fundamental difference in consumption patterns demands a shift in how we design and secure our APIs.

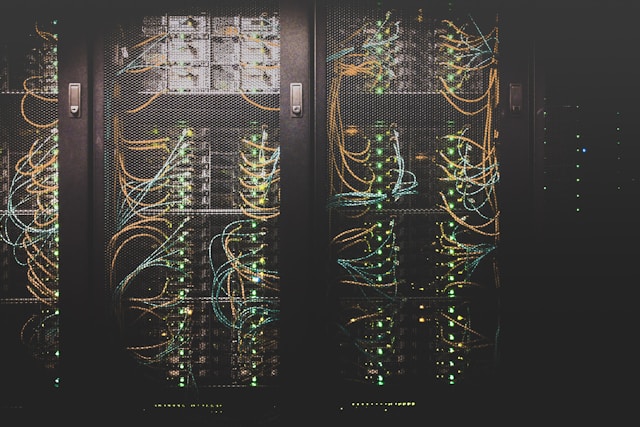

The implications stretch beyond technical specifications. When I examine API logs these days, I see traffic patterns that challenge our traditional assumptions. AI agents don't follow typical "business hours" usage patterns. They have no cognitive limits that naturally throttle how fast they issue requests. They process responses at machine speed and chain API calls in complex ways human developers rarely attempt.

This shift forces us to rethink several core aspects of API design:

Structure and Format

We must move beyond human-readable formats to machine-optimised structures. JSON over REST suits humans who need to read and understand responses. For AI agents, we could design more compact formats that optimise for machine processing rather than human comprehension.

Rate Limiting and Quotas

Our current rate limiting models assume human consumption patterns. AI agents operate at machine speed and scale. We need new models that account for the processing capabilities of AI while preventing abuse. This might mean shifting from simple request counts to complexity-based quotas.
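
To make the idea of complexity-based quotas concrete, here is a minimal sketch of a token bucket in which each request spends budget in proportion to its estimated cost instead of counting as one request. The class name, the `estimate_cost` formula, and its weights are illustrative assumptions, not a recommendation for any particular API.

```python
import time


class ComplexityBudget:
    """Token bucket where a request spends tokens equal to its estimated cost."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity                    # maximum cost units a client can hold
        self.refill_per_second = refill_per_second  # cost units restored each second
        self._clock = clock                         # injectable so the bucket is testable
        self._tokens = capacity
        self._updated_at = clock()

    def try_spend(self, cost):
        """Refill for the elapsed time, then spend `cost` if the budget allows it."""
        now = self._clock()
        elapsed = now - self._updated_at
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        self._updated_at = now
        if cost > self._tokens:
            return False
        self._tokens -= cost
        return True


def estimate_cost(page_size, joined_resources):
    """Hypothetical cost model: bigger pages and more joined resources cost more."""
    return 1.0 + page_size / 100 + 2.0 * joined_resources
```

Under this sketch, a client that sends a few expensive queries exhausts its budget as quickly as one that sends many cheap ones, which is the behaviour a plain request counter cannot express.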

Authentication and Security

Traditional API keys and OAuth flows centre on human developers. AI agents need different security models that account for their unique characteristics. We must consider how to verify the identity and intentions of AI agents while maintaining security.
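
One possible shape for this, sketched under heavy assumptions: the agent sends a signed declaration of who operates it and what it intends to do, and the API checks both the signature and the declared purpose. The field names (`agent_id`, `operator`, `purpose`) and the shared-secret scheme are hypothetical. A signature only proves the declaration was not tampered with; it does not prove the agent will behave as declared.

```python
import hashlib
import hmac
import json


def sign_declaration(secret, declaration):
    """Sign a canonical JSON encoding of the agent's declaration with HMAC-SHA256."""
    payload = json.dumps(declaration, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_declaration(secret, declaration, signature, allowed_purposes):
    """Accept the request only if the signature matches and the purpose is permitted."""
    expected = sign_declaration(secret, declaration)
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return False
    return declaration.get("purpose") in allowed_purposes
```

A caller would sign a dictionary such as `{"agent_id": "agent-42", "operator": "example-org", "purpose": "price-comparison"}` and send the signature alongside it; changing any field afterwards invalidates the signature.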

Documentation and Discovery

API documentation today focuses on human understanding. For AI agents, we need machine-readable specifications that go beyond OpenAPI. These specifications must include semantic information about what endpoints do, not just how to call them.
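
As a rough illustration of what "semantic information" could mean in practice, the sketch below attaches purpose and side-effect metadata to each endpoint so an agent can tell which calls are safe to explore. The `EndpointSemantics` type, its fields, and the example catalogue are invented for this illustration and are not part of OpenAPI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointSemantics:
    method: str
    path: str
    purpose: str              # what the call accomplishes, in plain language
    side_effects: tuple = ()  # real-world consequences, e.g. ("charges_card",)
    reversible: bool = True   # whether the effect can be undone afterwards


# Hypothetical catalogue for an imaginary orders API.
CATALOGUE = [
    EndpointSemantics("GET", "/orders/{id}", "Fetch one order"),
    EndpointSemantics("POST", "/orders", "Create an order",
                      side_effects=("charges_card",)),
    EndpointSemantics("DELETE", "/orders/{id}", "Cancel an order",
                      side_effects=("refunds_card",), reversible=False),
]


def safe_to_explore(catalogue):
    """Return the endpoints an agent can call freely because they change nothing."""
    return [f"{e.method} {e.path}" for e in catalogue if not e.side_effects]
```

With metadata like this, an agent planning a sequence of calls can restrict its experimentation to side-effect-free endpoints and ask for confirmation before anything irreversible.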

The shift impacts how we monitor and maintain APIs too. Traditional metrics like response time and error rates remain relevant, but we need new metrics for AI agent behaviour. How do we measure the "success" of an API when its primary users are machines that can adapt to and work around problems?

Performance optimisation takes on new dimensions when designing for AI. A human developer might tolerate occasional latency, but an AI agent that makes thousands of calls per second multiplies every delay across its whole workflow. This demands new approaches to caching, edge computing, and response optimisation.

Looking ahead, I see APIs evolving into two parallel tracks: human-oriented interfaces that prioritise developer experience, and machine-oriented interfaces optimised for AI consumption. This isn't about choosing one over the other - it's about recognising that these users have fundamentally different needs.

The challenges extend to business models too. How do we price APIs when the consumers are AI agents that can process information at machine scale? Traditional per-request pricing might not make sense when an AI can make millions of optimised calls that would take a human developer years to replicate.

The rise of residential proxies adds another layer of complexity. These proxies route traffic through consumer IP addresses, so AI agents appear as regular users and it becomes harder to distinguish between human and machine traffic. This forces us to move beyond IP-based rate limiting and develop more sophisticated ways to manage API access.
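
One simple step in that direction is to key limits on an authenticated identity rather than the source address, so rotating IPs does not reset the counter. The sketch below assumes clients send an `X-API-Key` header (a common convention, but an assumption here) and uses a basic sliding window; `client_key` and `SlidingWindowLimiter` are illustrative names.

```python
import time
from collections import defaultdict, deque


def client_key(headers, remote_ip):
    """Prefer the authenticated identity; fall back to the IP for anonymous calls."""
    api_key = headers.get("X-API-Key")
    return f"key:{api_key}" if api_key else f"ip:{remote_ip}"


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within the last `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key):
        now = self._clock()
        hits = self._hits[key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True
```

This does nothing about unauthenticated traffic arriving through proxies, which still falls back to the IP address; it only ensures that an identified agent cannot multiply its quota by changing addresses.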

We must also consider the ethical implications. As APIs become primarily consumed by AI agents, we need frameworks to ensure responsible use. This includes considering how our APIs might be used in AI systems and what guardrails we need to implement.

The future I see isn't about replacing human developers - it's about acknowledging that AI agents represent a new class of API consumers with unique needs and capabilities. This requires us to evolve our thinking about API design, security, and management.

The APIs we build today will form the foundation for tomorrow's AI-driven systems. We have a responsibility to design them thoughtfully, considering both human and AI consumers. This means moving beyond our current paradigms and embracing new approaches that serve both audiences effectively.

The shift to AI-first API design isn't coming - it's here. The question isn't whether to adapt, but how quickly we can evolve our practices to meet this new reality. Those who embrace this change will shape the future of how machines and humans interact through APIs.

Our APIs must evolve. The future depends on it.