The internet is filled with bots. In fact, recent studies estimate that nearly 50% of all internet traffic is generated by these automated programs. Bots come in various forms: some are good, like the search engine crawlers that index your site; some are bad, like scrapers and sneaker bots; and some fall into a grey area, like the backlink and marketing bots run by Ahrefs and SEMrush. While bots can serve a useful purpose, they can also cause problems when they crawl websites too aggressively. In this blog post, we'll explore the different types of bots and how you can manage them using robots.txt and bot management tools.

Understanding the Different Types of Bots

'Good Bots'

Good bots are essential to the smooth functioning of the internet. Search engine crawlers like Googlebot and Bingbot help index webpages, ensuring that search engine results are up-to-date and relevant. Other examples of good bots include uptime and performance monitoring bots.

'Bad Bots'

Bad bots are harmful to websites and their users. Common types include:

- Content scrapers, which copy data from websites so it can be repurposed elsewhere.

- Sneaker bots, which automatically purchase limited-edition products (like sneakers) before human users can.

- Spam bots, which post unsolicited messages and advertisements in comment sections or forums.

- Vulnerability scanners, which request thousands of URL combinations on a website looking for security vulnerabilities.

- Account takeover bots, which try to gain access to existing user or admin accounts through credential stuffing or brute-force attacks.

'Grey Bots'

Grey bots fall somewhere between good and bad. They often serve a useful purpose and generally obey the crawling directives in your robots.txt file, but they can cause problems when they crawl websites too aggressively. Common examples include:

- AhrefsBot: A backlink analysis bot used by Ahrefs, an SEO tool.

- SEMrushBot: A bot used by SEMrush, another popular SEO and digital marketing tool.

- MJ12bot: A bot used by Majestic, a service that provides backlink data and analysis.

- Screaming Frog: An SEO crawler that runs locally on a user's desktop computer.

When Grey Bots (and Even Good Bots) Go Bad

Left unattended and unmanaged, grey bots can lead to a variety of issues, such as:

- Slow page loading times, impacting user experience.

- Strain on server resources, potentially causing crashes or downtime, and costing you money!

- Distorted website analytics, as bot traffic is mistaken for human traffic.
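A first step in spotting these problems is measuring how much of your traffic these bots actually generate. The Python sketch below counts requests per bot in an access log. It assumes the Apache/Nginx "combined" log format, where the User-Agent is the last double-quoted field, and the list of bot tokens is only an illustrative assumption. Because User-Agent strings are trivial to fake, treat the numbers as indicative rather than verified.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Illustrative User-Agent tokens for bots mentioned in this post (an assumption;
# extend the tuple with whatever appears in your own logs).
BOT_TOKENS = ("Googlebot", "bingbot", "AhrefsBot", "SemrushBot", "MJ12bot", "Screaming Frog")

# In the "combined" log format the User-Agent is the final double-quoted field.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')


def classify(log_line: str) -> str:
    """Return the first bot token found in the line's User-Agent, or "other"."""
    match = USER_AGENT_FIELD.search(log_line)
    user_agent = match.group(1).lower() if match else ""
    for token in BOT_TOKENS:
        if token.lower() in user_agent:
            return token
    return "other"


def bot_breakdown(log_lines: Iterable[str]) -> Tuple[Counter, int]:
    """Count requests per bot token and return (counts, total_requests)."""
    counts = Counter(classify(line) for line in log_lines)
    return counts, sum(counts.values())


# Hypothetical sample lines using documentation-range IP addresses.
SAMPLE_LOG = [
    '203.0.113.10 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.11 - - [10/Oct/2023:13:55:37 +0000] "GET /shoes?sort=price HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
    '198.51.100.7 - - [10/Oct/2023:13:55:38 +0000] "GET / HTTP/1.1" 200 8192 "https://www.example.com/" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"',
]

counts, total = bot_breakdown(SAMPLE_LOG)
for token, n in counts.most_common():
    print(f"{token}: {n} requests ({n / total:.0%})")
```

In practice you would stream lines from your real log file into `bot_breakdown`; the "other" bucket can still contain bots that impersonate browsers.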

Managing Grey Bots with Robots.txt

The robots.txt file is a simple text file that tells web crawlers which parts of your site they can or cannot access. You can use this file to manage bot behavior and protect your website from aggressive crawling. Some ways to manage grey bots with robots.txt include:

Disallowing specific bots: You can block specific bots from accessing your site by adding "User-agent" and "Disallow" directives to your robots.txt file. For example:

```
User-agent: AhrefsBot
Disallow: /
```

Limiting crawl rate: You can ask bots to slow down their crawling by adding a "Crawl-delay" directive, which specifies a delay in seconds. Not every crawler supports it; Googlebot, for example, ignores Crawl-delay.

```
User-agent: SEMrushBot
Crawl-delay: 10
```
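If you want to sanity-check how a crawler that honours robots.txt would read these rules, Python's standard-library `urllib.robotparser` can parse them. This is only a sketch: the URL is a placeholder, the parser implements the classic robots.txt conventions rather than every search engine's extensions, and each real bot applies its own interpretation.

```python
from urllib.robotparser import RobotFileParser

# The two example rule groups from above, combined into one robots.txt file.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Disallow: /

User-agent: SEMrushBot
Crawl-delay: 10
"""

SECONDS_PER_DAY = 24 * 60 * 60

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Placeholder URL; with these rules every path on the site behaves the same way.
url = "https://www.example.com/products/"

# robotparser matches on the bot's product token, so pass "AhrefsBot", not the full UA string.
for bot in ("AhrefsBot", "SEMrushBot", "Bingbot"):
    allowed = parser.can_fetch(bot, url)
    delay = parser.crawl_delay(bot)  # None when no Crawl-delay applies to this bot
    # A bot waiting `delay` seconds between requests makes at most this many per day.
    daily_cap = SECONDS_PER_DAY // delay if delay else None
    print(f"{bot}: allowed={allowed}, crawl_delay={delay}, max_requests_per_day={daily_cap}")
```

The daily cap is simple arithmetic: 86,400 seconds in a day divided by a 10-second delay allows a compliant bot at most 8,640 requests per day. Crawlers interpret Crawl-delay in slightly different ways, so treat that figure as a rough ceiling.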

Not all bots obey robots.txt. Screaming Frog, for example, can be configured to ignore robots.txt entirely and crawl a site as fast as its operator chooses. Naturally, you wouldn't want a competitor doing this to your site!

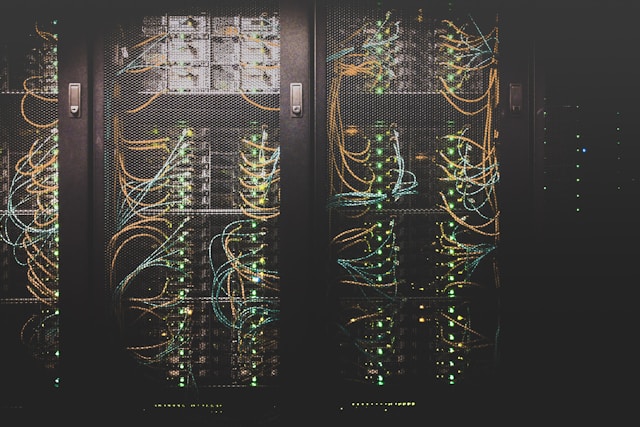

Bot Management Tools

In addition to robots.txt, bot management tools (like those provided by Peakhour) can protect your website from abusive bots. Good bot management tools automatically block the majority of unwanted traffic using a combination of threat intelligence, fingerprinting, reverse DNS verification, and header inspection.

Advanced techniques like rate limiting and machine learning can help weed out the most sophisticated bad bots.
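Rate limiting caps how many requests a single client can make in a given period. One common way to implement it is a token bucket: each client's bucket refills at a steady rate up to a burst capacity, and every request spends one token. The Python sketch below keys buckets by client IP; the rate and capacity values are arbitrary, and this is a generic illustration rather than how Peakhour or any other product implements rate limiting.

```python
import time
from collections import defaultdict
from typing import Dict, Optional


class TokenBucket:
    """Refills `rate` tokens per second up to `capacity`; each request costs one token."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so a short burst is allowed
        self.last: Optional[float] = None

    def allow(self, now: float) -> bool:
        if self.last is not None:
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One token bucket per client key (for example, the client IP address)."""

    def __init__(self, rate: float, capacity: float) -> None:
        self._buckets: Dict[str, TokenBucket] = defaultdict(lambda: TokenBucket(rate, capacity))

    def allow(self, client_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        return self._buckets[client_key].allow(now)


limiter = RateLimiter(rate=1.0, capacity=3)  # about 1 request/second sustained, bursts of 3
burst = [limiter.allow("203.0.113.7", now=0.0) for _ in range(4)]
print(burst)  # → [True, True, True, False]
```

The in-memory dictionary never evicts idle clients and is local to one process; a real deployment would need expiry and shared state across servers.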

Search Bots and Double Crawling

Search bots like Bingbot can sometimes follow links blindly and crawl the same page many times under different URL parameters. This double, triple, or worse crawling increases server load and makes the indexing of your website's content inefficient. It is especially problematic on eCommerce sites that offer many ways to filter their product catalogue. We've seen Bing go haywire on a number of sites. Most recently, it was issuing around 50,000 requests per day to the search function of a Magento 2 store, cycling through parameters; once fixed, this dropped to 2-3k requests per day. On a busy OpenCart store, Bing was responsible for nearly half of all page requests (40k page requests), and configuring it to ignore parameters brought this down to around 4k per day.
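To gauge how much of a crawler's activity is duplicate crawling, you can collapse the URLs it requested into a canonical form by dropping parameters that don't change the page content, then compare distinct pages with total requests. In this Python sketch the names in `IGNORED_PARAMS` and the example URLs are hypothetical; substitute the sorting, filtering, or display parameters your own store uses.

```python
from typing import Iterable
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that reorder or re-display a listing without changing its content.
IGNORED_PARAMS = {"sort", "dir", "limit", "mode"}


def canonicalize(url: str) -> str:
    """Drop ignored parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    # Fragments are never sent to the server, so they are dropped too.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def duplicate_ratio(urls: Iterable[str]) -> float:
    """Fraction of requests that hit a page already crawled under another URL."""
    url_list = list(urls)
    if not url_list:
        return 0.0
    distinct = {canonicalize(u) for u in url_list}
    return 1 - len(distinct) / len(url_list)


crawled = [
    "https://shop.example.com/shoes?sort=price&dir=asc",
    "https://shop.example.com/shoes?dir=desc&sort=price",
    "https://shop.example.com/shoes?limit=48",
    "https://shop.example.com/shoes",
    "https://shop.example.com/shoes?page=2&sort=name",
]
print(sorted({canonicalize(u) for u in crawled}))
print(f"{duplicate_ratio(crawled):.0%} of these requests were duplicates")
```

Sorting the remaining parameters means that simply reordering them does not count as a new page, while parameters such as `page` that genuinely change the content are kept.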

Configuring Search Bots to Ignore Query Parameters

Note: Since this post was published, both Google and Bing have removed the option to ignore URL parameters from their webmaster tools (Google Search Console and Bing Webmaster Tools). See using robots.txt to instruct search engines to ignore query string parameters.
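The robots.txt approach relies on the `*` and `$` wildcards that Google and Bing support (RFC 9309 standardises them): `*` matches any sequence of characters, and a trailing `$` anchors the end of the URL. Python's `urllib.robotparser` does not implement these wildcards, so this sketch translates rules into regular expressions to show which URLs two example rules, `Disallow: /*?sort=` and `Disallow: /*&sort=`, would block. It follows RFC 9309's precedence (the longest matching rule wins, and Allow wins a tie). The `sort` parameter is a hypothetical example, and the search engines' own matchers remain the authority.

```python
import re
from typing import List, Tuple

# Example rules; "sort" stands in for whichever parameter you want crawlers to skip.
RULES: List[Tuple[str, str]] = [
    ("disallow", "/*?sort="),  # sort is the first query parameter
    ("disallow", "/*&sort="),  # sort appears after another parameter
]


def rule_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern: '*' matches anything, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path_and_query: str, rules: List[Tuple[str, str]]) -> bool:
    """Longest matching rule wins; on a tie allow beats disallow; no match means allowed."""
    best_length, allowed = -1, True
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty Disallow value blocks nothing
        if rule_to_regex(pattern).match(path_and_query):  # match() anchors at the start
            length = len(pattern)
            if length > best_length or (length == best_length and kind == "allow"):
                best_length, allowed = length, kind == "allow"
    return allowed


for path in ("/shoes", "/shoes?sort=price", "/shoes?page=2&sort=name", "/shoes?resort=1"):
    print(path, "allowed" if is_allowed(path, RULES) else "blocked")
```

Using separate `?sort=` and `&sort=` rules catches the parameter in any position without also blocking unrelated names that merely end in "sort", such as `resort`.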

To help search bots crawl your site efficiently, you can configure them to ignore specific query parameters using the following methods:

Configuring Bing Webmaster Tools

Bing Webmaster Tools provides an option to specify URL parameters that should be ignored during the crawling process. To configure this setting, follow these steps:

- Sign in to your Bing Webmaster Tools account and select the website you want to manage.

- Navigate to the "Configure My Site" section and click on "URL Parameters."

- Click on "Add Parameter" and enter the parameter name you want Bingbot to ignore.

- Select "Ignore this parameter" from the dropdown menu and click on "Save."

By configuring Bing Webmaster Tools this way, you can stop Bingbot from repeatedly crawling pages that differ only by the specified URL parameters, reducing server load and making indexing more efficient.

Managing Other Search Bots

For other search engines like Google, you can use their respective webmaster tools to manage URL parameters. In Google Search Console, follow these steps:

- Sign in to your Google Search Console account and select the property you want to manage.

- Navigate to the "Crawl" section and click on "URL Parameters."

- Click on "Add Parameter" and enter the parameter name you want Googlebot to ignore.

- Under "Does this parameter change page content seen by the user?", select "Yes", then choose "No URLs" for "Which URLs with this parameter should Googlebot crawl?"

- Click on "Save."

By specifying the parameters you want search bots to ignore, you can prevent double crawling and ensure a more efficient indexing process for your website.

Final Thoughts

When good or grey bots crawl too aggressively, they can unintentionally cause problems similar to those caused by malicious bots, including overloaded servers, slower websites, and a poorer user experience. Monitoring your website traffic and server load, implementing thoughtful rules in your robots.txt, and properly using the major search engines' webmaster tools to keep crawling efficient can significantly improve your website's performance and lower your infrastructure costs.