The trend is unmistakable: AI agents now craft exploits by analysing security responses in real time. This marks the death of static security rules and traditional Web Application Firewalls (WAFs). Let me explain why.

I examined an AI agent probing a test environment last week. It sent requests, observed the responses, and systematically developed bypasses for each security control. The agent identified pattern-based rules, learned their structure, and generated variations until it found gaps. No human intervention required.

This automated exploit development changes the security landscape. Traditional defences rely on known patterns: regex rules, signature matching, IP reputation. These approaches assume threats follow recognisable templates. That assumption no longer holds.

Consider a standard WAF rule that blocks SQL injection through pattern matching. An AI agent examines the responses, infers which patterns the rule matches, then generates unique variants designed to slip past it while preserving the exploit's functionality. The variants evolve as the agent learns which approaches succeed.
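
To make that brittleness concrete, here is a minimal sketch of a static, regex-based SQL injection rule of the kind described above. The rule, the `blocks` function and the payloads are illustrative assumptions, not taken from any particular WAF product. The second payload replaces the space with an inline SQL comment, a long-documented evasion that many SQL dialects (MySQL among them) still parse as the same `UNION SELECT`.

```python
import re

# Hypothetical static WAF rule: block anything containing "UNION <whitespace> SELECT".
UNION_SELECT_RULE = re.compile(r"union\s+select", re.IGNORECASE)


def blocks(payload: str) -> bool:
    """Return True if the static rule would block this payload."""
    return UNION_SELECT_RULE.search(payload) is not None


canonical = "' UNION SELECT username FROM users--"
# Same query intent: the inline comment /**/ stands in for the whitespace.
variant = "' UNION/**/SELECT username FROM users--"

for p in (canonical, variant):
    print(f"{'BLOCKED' if blocks(p) else 'allowed'}: {p}")
```

The first payload is blocked and the second, functionally equivalent one is allowed. An agent watching which requests get blocked only needs to discover one such equivalence, and patching the regex for `/**/` simply moves the puzzle to the next variant.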

The implications extend beyond SQL injection. AI agents apply the same systematic probing to every security control: XSS filters, access controls, input validation. Each static rule becomes a puzzle for the AI to solve through iterative testing and bypass generation.

By 2026, I estimate AI agents will drive over 50% of exploit attempts. The speed of this shift stems from three factors:

- AI agents operate around the clock, testing and learning without pause

- Successful exploits feed back into training data, improving future attempts

- Agents share knowledge, building collective intelligence about bypass techniques

This marks the end of static security. Traditional WAFs, which rely on fixed rules and signatures, cannot keep pace with AI-generated exploits. Each rule becomes outdated as agents discover new bypasses.

The path forward requires a fundamental shift in security architecture. Organisations must move to context-aware systems that analyse intent rather than patterns. These systems use behavioural AI to distinguish between legitimate requests and exploit attempts, regardless of their specific structure.

Key elements of this new approach include:

- Intent analysis through deep inspection of request sequences

- Behavioural models that separate normal from malicious activity

- Real-time adaptation as new exploit techniques emerge

- Proactive identification of potential vulnerabilities

- Integration of threat intelligence across systems
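
As a deliberately simple illustration of behavioural modelling, the sketch below learns a baseline from known-good sessions and scores new sessions by how far they deviate from it. The two features (client-error rate and distinct parameter values per request), the `SessionStats` type and the z-score threshold of 3 are assumptions chosen for illustration; a production system would use far richer features and models than a per-feature z-score.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class SessionStats:
    requests: int
    client_errors: int    # 4xx responses returned to this session
    distinct_params: int  # distinct parameter values the session submitted

    def features(self) -> tuple[float, float]:
        n = max(self.requests, 1)
        return (self.client_errors / n, self.distinct_params / n)


def fit_baseline(normal: list[SessionStats]) -> list[tuple[float, float]]:
    """Per-feature (mean, standard deviation) learned from known-good sessions."""
    columns = zip(*(s.features() for s in normal))
    # Guard against a zero deviation so the score below never divides by zero.
    return [(mean(col), pstdev(col) or 1e-9) for col in columns]


def anomaly_score(session: SessionStats, baseline: list[tuple[float, float]]) -> float:
    """Largest absolute z-score across features: how unusual the session looks."""
    return max(abs(x - mu) / sd for x, (mu, sd) in zip(session.features(), baseline))


# Hypothetical known-good sessions used to build the baseline.
baseline = fit_baseline([
    SessionStats(100, 2, 12),
    SessionStats(80, 1, 8),
    SessionStats(120, 3, 15),
    SessionStats(90, 2, 10),
])
probe = SessionStats(requests=50, client_errors=40, distinct_params=48)
typical = SessionStats(requests=100, client_errors=2, distinct_params=11)
THRESHOLD = 3.0  # illustrative cut-off, not a tuned value

print(anomaly_score(probe, baseline) > THRESHOLD)    # True
print(anomaly_score(typical, baseline) > THRESHOLD)  # False
```

Note that nothing here inspects payload content: the probing session stands out purely because of how it behaves, which is the property a pattern-free defence relies on.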

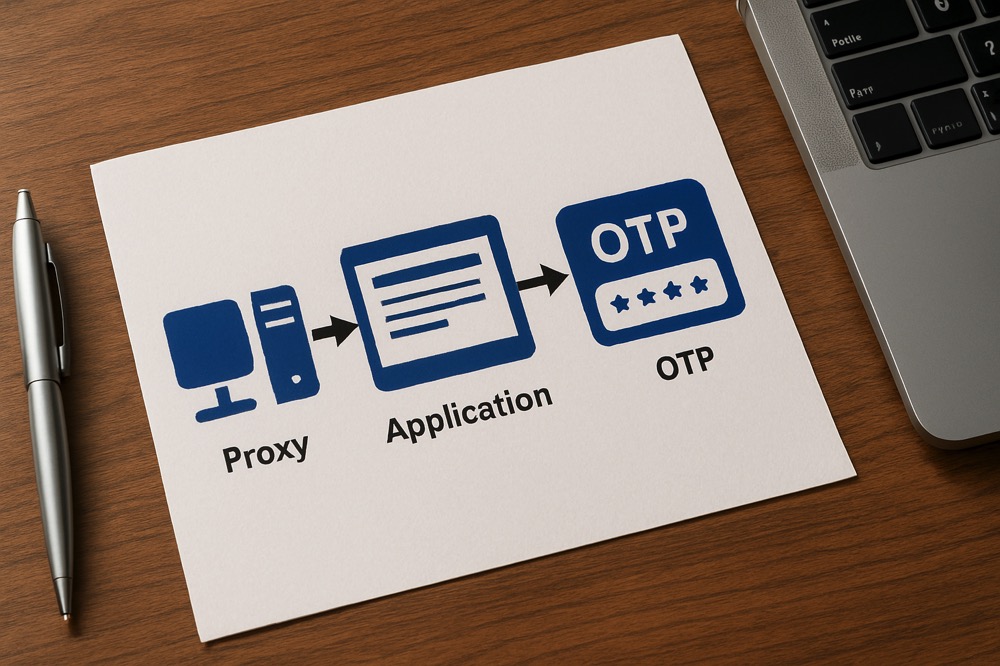

The challenge intensifies when AI agents use residential proxies. These proxies route traffic through real consumer IP addresses, bypassing location-based blocks and IP reputation checks. An AI agent operating through residential proxies can probe defences while appearing to come from legitimate users worldwide.
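
The sketch below illustrates why per-IP controls struggle against this, and one signal that survives IP rotation. It assumes each request can be tied to an application-level session identifier (for instance because the probed endpoint requires login); the event format, the function names and the limit of three IPs per session are hypothetical. Legitimate mobile users also change IP addresses, so in practice this would be one signal among several rather than a blocking rule on its own.

```python
from collections import defaultdict

# Each event is (client_ip, session_id), assumed pre-filtered to a single time window.
Event = tuple[str, str]


def requests_per_ip(events: list[Event]) -> dict[str, int]:
    """What a classic per-IP rate limiter sees."""
    counts: dict[str, int] = defaultdict(int)
    for ip, _session in events:
        counts[ip] += 1
    return dict(counts)


def sessions_hopping_ips(events: list[Event], max_ips: int = 3) -> set[str]:
    """Sessions seen from more distinct IPs than max_ips within the window."""
    ips_by_session: dict[str, set[str]] = defaultdict(set)
    for ip, session in events:
        ips_by_session[session].add(ip)
    return {s for s, ips in ips_by_session.items() if len(ips) > max_ips}


# Hypothetical traffic (RFC 5737 documentation addresses): an agent rotates through
# 20 residential IPs, one request each, while keeping one authenticated session;
# a normal user stays on a single IP.
events = [(f"203.0.113.{i}", "sess-agent") for i in range(20)]
events += [("198.51.100.7", "sess-user")] * 5

print(max(requests_per_ip(events).values()))  # 5: no single IP looks busy
print(sessions_hopping_ips(events))           # {'sess-agent'}
```

Aggregating by session rather than by address is the design choice that matters: the proxy network changes the IP on every request, but the agent still has to carry its session to reach authenticated functionality.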

Combining AI-driven exploit generation with residential proxy networks renders traditional security controls obsolete. Organisations that continue to rely on static rules face inevitable compromise.

The time to act is now. Security teams must:

- Audit existing WAF rules to identify pattern-based weaknesses

- Deploy behavioural analysis capabilities to detect malicious intent

- Implement adaptive security controls that evolve with threats

- Monitor for AI-driven probing attempts

- Build detection for residential proxy traffic
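
The probing item in the list above can be illustrated with a minimal sketch of one possible signal: a client that receives many blocked responses for many different payloads within a short window looks more like systematic bypass generation than a user retrying the same request. The `ProbeMonitor` class, the use of HTTP 403 as the "blocked" status, the 60-second window and the threshold of five variants are all illustrative assumptions.

```python
from collections import defaultdict, deque


class ProbeMonitor:
    """Flags clients that trigger many distinct blocked payloads in a sliding window."""

    def __init__(self, window_s: float = 60.0, min_variants: int = 5):
        self.window_s = window_s
        self.min_variants = min_variants
        # client -> deque of (timestamp, payload) for requests that were blocked
        self._blocked: dict[str, deque] = defaultdict(deque)

    def record(self, client: str, ts: float, status: int, payload: str) -> bool:
        """Record one response; return True if the client now looks like it is probing.

        Assumes timestamps for a given client arrive in non-decreasing order.
        """
        history = self._blocked[client]
        if status == 403:  # assumption: the WAF signals a block with HTTP 403
            history.append((ts, payload))
        # Drop blocked events that have fallen out of the time window.
        while history and ts - history[0][0] > self.window_s:
            history.popleft()
        distinct = {p for _, p in history}
        return len(distinct) >= self.min_variants


monitor = ProbeMonitor()
# An agent mutating its payload on every attempt.
agent_flags = [monitor.record("agent", t, 403, f"payload-variant-{t}") for t in range(6)]
# A user hitting the same blocked request repeatedly.
user_flags = [monitor.record("user", t, 403, "same-request") for t in range(6)]
print(agent_flags[-1], user_flags[-1])  # True False
```

Counting distinct payloads rather than raw blocks is what separates an agent exploring variations from a frustrated user pressing refresh.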

Those who wait risk finding their defences systematically dismantled by AI agents. The security landscape has shifted, and static rules cannot hold back this tide of automated exploitation.

This requires a mental shift in how we approach security. Rather than trying to block specific patterns, we must focus on understanding and controlling the broader context of system interactions. The goal moves from "preventing known attacks" to "identifying and blocking malicious behaviour, regardless of its specific form."

The future belongs to adaptive security systems that think like the AI agents probing them. Static rules are dead. The age of intelligent, context-aware defence has begun.

Your security strategy must evolve now. The AI agents are already at work.

The Reasoning Model Revolution

The emergence of open reasoning models such as DeepSeek-R1 transforms this threat landscape further. Unlike traditional AI that follows programmed patterns, reasoning models understand context and adapt strategies dynamically. This capability creates unprecedented security challenges.

Consider how a reasoning model approaches security testing. Rather than simply probing for weaknesses, it builds a conceptual model of the system's defences. It understands the purpose of security controls and reasons about potential bypasses. This enables it to generate novel attack strategies never seen in training data.

DeepSeek's impact demonstrates this shift. Within months of release, its models showed capabilities matching established players at a fraction of the cost. This rapid progress stems from reasoning models' ability to understand and adapt rather than simply pattern-match.

For security teams, this creates a critical challenge. Reasoning models don't just find gaps in rules: they understand why rules exist and devise creative bypasses. They analyse response patterns, deduce the logic behind security controls, and generate attacks that exploit fundamental assumptions.

By 2027, I expect reasoning models to handle most security testing and exploit development. Their advantages are too compelling:

- They understand system architecture and security principles

- They generate novel attack strategies through reasoning

- They adapt in real-time based on system responses

- They share and build upon successful approaches

This shift makes traditional security approaches obsolete faster than many realise. Pattern matching and rule-based systems cannot counter an opponent that understands and reasons about their fundamental principles.

The combination of reasoning models and residential proxies creates a perfect storm. Reasoning models devise sophisticated attacks while proxies mask their origin. Each successful breach feeds back into the model's understanding, improving future attempts.

Security teams must embrace a new paradigm focused on:

- Understanding attack narratives rather than patterns

- Detecting anomalous reasoning rather than known signatures

- Building systems that adapt to novel attack strategies

- Implementing security that reasons about intent

- Developing defences that evolve through adversarial learning
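
One way to read an attack narrative rather than individual requests is to look at how a session's inputs evolve over time. An agent refining a bypass tends to submit runs of near-identical inputs, each a small edit of the last. The sketch below counts such consecutive near-duplicates using Python's standard `difflib.SequenceMatcher`; the 0.8 similarity threshold, the minimum of three pairs and the function names are assumptions. Legitimate behaviour such as pagination can also produce near-duplicates, so this is a signal to combine with others rather than a verdict.

```python
from difflib import SequenceMatcher


def near_duplicate_pairs(inputs: list[str], threshold: float = 0.8) -> int:
    """Count consecutive inputs that are similar but not identical (small edits)."""
    return sum(
        1
        for a, b in zip(inputs, inputs[1:])
        if a != b and SequenceMatcher(None, a, b).ratio() >= threshold
    )


def looks_like_refinement(inputs: list[str], min_pairs: int = 3) -> bool:
    """True if the session shows a sustained run of small, iterative edits."""
    return near_duplicate_pairs(inputs) >= min_pairs


# Hypothetical session histories (query strings or paths, in request order).
probing = ["q=abc<1>", "q=abc<2>", "q=abc<3>", "q=abc<4>", "q=abc<5>"]
browsing = ["/", "/products", "/products/17", "/cart", "/checkout"]

print(near_duplicate_pairs(probing), looks_like_refinement(probing))    # 4 True
print(near_duplicate_pairs(browsing), looks_like_refinement(browsing))  # 1 False
```

Identical retries are deliberately excluded (`a != b`), so the score rises only when the inputs keep changing in small steps, which is the shape of iterative bypass generation rather than of a stuck client.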

The future demands security systems that can reason about threats as effectively as the AI agents probing them. Traditional approaches will fail against opponents that understand the logic behind security controls and devise creative bypasses.

The age of reasoning security has begun. Those who adapt will survive. Those who cling to static rules and pattern matching face extinction.

The question isn't whether to evolve, but how quickly you can embrace this new reality.