Today's sophisticated bad bots often circumvent traditional security countermeasures. They disrupt and damage websites, mobile applications, and APIs. Malicious bot tactics include scraping user and pricing data, creating fake accounts, conducting advertising click fraud, exhausting online inventories, and taking websites offline completely with automated DDoS attacks.

About one-quarter of all website traffic in 2019 originated from bad bots, an increase of 18 percent over 2018. Advanced persistent bots (APBs) made up 75 percent of that bad bot traffic. They attempt to evade detection by cycling through random IP addresses, using anonymous or residential proxies, and changing their identities (user agents). The industries hardest hit by bad bots in 2019 included financial services, education, ecommerce, and government, as well as media and airlines.

“Bot attack campaigns have become big business for threat actors, and major organizations are now fighting to support legitimate users and prospects while keeping attackers out of online applications and services,” says Paula Musich, Research Director, Enterprise Management Associates.

Bots have evolved over the years, from simple scripts into sophisticated networks of distributed agents that can mimic human interactions with machine learning techniques. They can avoid detection by network security technologies that have not kept pace with these new, sophisticated automated agents.

To mitigate the damage from bad bots and stay ahead of evolving threats, organizations need to deploy an array of sophisticated security countermeasures that not only detect bad bots but also render them economically harmless.

Bot Countermeasure Best Practices:

The following best practices for countering bad bots range from network security to machine learning and behavioral analysis. Each helps reduce the economic harm that malicious bots inflict on businesses and end users.

Web Application Firewalls

Web Application Firewalls (WAFs) are a common yet essential first line of defense. They filter out harmful Layer 7 web application (HTTP) traffic using rules or policies that protect organizations against Distributed Denial of Service (DDoS) bot attacks. WAFs also protect against cross-site request forgery (CSRF), cross-site scripting (XSS), file inclusion, and SQL injection attacks. A WAF typically acts as a reverse proxy in front of the servers it protects. It can be deployed as an appliance, server plug-in, or filter, and customized by application type or use case. WAF rules are also flexible and can be updated or changed based on the type of bot attack.
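To make the idea of rule-based filtering concrete, here is a minimal sketch in Python. The rule names, the patterns, and the `inspect_request` function are illustrative assumptions, not the rule set of any real WAF product, and production rule sets are far larger and more careful about evasion.

```python
import re
from urllib.parse import unquote_plus

# Hypothetical, deliberately tiny rule set for illustration only.
RULES = [
    ("sql_injection", re.compile(r"(?i)\bunion\b.+\bselect\b|'\s*or\s+1\s*=\s*1")),
    ("xss", re.compile(r"(?i)<\s*script\b")),
    ("file_inclusion", re.compile(r"\.\./")),
]


def inspect_request(path_and_query: str) -> list:
    """Return the names of all rules matched by the URL-decoded request."""
    decoded = unquote_plus(path_and_query)
    return [name for name, pattern in RULES if pattern.search(decoded)]


if __name__ == "__main__":
    for request in ("/search?q=shoes", "/download?file=../../etc/passwd"):
        matches = inspect_request(request)
        print(request, "->", "block" if matches else "allow", matches)
```

Because the rules live in a plain list, adding or changing one in response to a new attack pattern does not require touching the matching logic, which mirrors the flexibility described above.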

IP Tracking and Reputation

Sophisticated bots can be detected using network forensics: inspecting incoming web traffic and assessing whether requests come from actual users or from bad bots. Requests can be analyzed against data sources including Tor and proxy IP lists, IP addresses, IP geolocation information, ISP information, and IP owners. Additional real-time and near-real-time malicious IP threat data needed to block attacks comes from network data, CERTs, MITRE, and cooperating competitors.
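A reputation check of this kind can be sketched with Python's standard `ipaddress` module. The feed contents and the `lookup_reputation` function are hypothetical; the address ranges are reserved documentation blocks, not real threat data.

```python
import ipaddress
from typing import Optional

# Hypothetical reputation feed mapping networks to a reason tag.
# These ranges are reserved for documentation and stand in for real data.
REPUTATION_FEED = {
    "192.0.2.0/24": "tor_exit_node",
    "198.51.100.0/24": "open_proxy",
}
NETWORKS = [(ipaddress.ip_network(cidr), tag) for cidr, tag in REPUTATION_FEED.items()]


def lookup_reputation(client_ip: str) -> Optional[str]:
    """Return the feed tag for the first listed network containing the IP, else None."""
    address = ipaddress.ip_address(client_ip)
    for network, tag in NETWORKS:
        if address in network:
            return tag
    return None


if __name__ == "__main__":
    for ip in ("192.0.2.55", "203.0.113.9"):
        print(ip, "->", lookup_reputation(ip) or "no listing")
```

A linear scan is fine for a handful of networks; a large feed would more plausibly use a prefix tree or a dedicated lookup service.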

Client/Device Fingerprinting

Fingerprinting attempts to identify devices, ranging from PCs and servers to Internet of Things (IoT) and mobile devices, using data attributes that build real-time risk profiles to stop bot attacks. A bot detection fingerprinting engine uses web page access data to generate a unique fingerprint for each end-user device. This helps expose bad bots that rely on evasion techniques such as dynamic IP addresses and anonymous web proxies.
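The sketch below shows why a fingerprint survives an IP change: it is derived from device attributes rather than from the network address. The attribute names in `FINGERPRINT_FIELDS` and the `device_fingerprint` function are assumptions for illustration; real engines combine many more signals and tolerate small changes between visits.

```python
import hashlib
import json

# Hypothetical attribute set; the IP address is deliberately excluded.
FINGERPRINT_FIELDS = ("user_agent", "accept_language", "screen", "timezone", "fonts")


def device_fingerprint(attributes: dict) -> str:
    """Hash the selected attributes into a stable hexadecimal identifier."""
    selected = {field: attributes.get(field, "") for field in FINGERPRINT_FIELDS}
    canonical = json.dumps(selected, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    visit = {
        "ip": "192.0.2.10",
        "user_agent": "ExampleBrowser/1.0",
        "accept_language": "en-US",
        "screen": "1920x1080",
        "timezone": "UTC-5",
        "fonts": "Arial,Verdana",
    }
    same_device_new_ip = dict(visit, ip="198.51.100.77")
    print(device_fingerprint(visit) == device_fingerprint(same_device_new_ip))
```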

Machine Learning

Artificial Intelligence (AI) and machine learning algorithms are increasingly used to analyze traffic and make recommendations for malicious bot mitigation, drawing on data such as user activity history, behavioral patterns, and metadata. The benefit of machine learning is that custom-tailored algorithms can be deployed to target bots and can iteratively process user data and identities to discern emerging bot attack patterns from very large amounts of real-time information.

Tarpitting

Tarpitting is a bot countermeasure that delays and slows down incoming malicious traffic from suspect connections. The technique raises the financial and resource costs of a bot attack in an attempt to discourage malicious actors. Tarpits can delay responses to bot requests or block the attacking source IP address completely. Innovative tarpitting techniques include requiring bad bots to solve computationally complex math challenges before they can access resources or websites, thereby slowing down or stopping bot activity.
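One common form of computational challenge is hashcash-style proof of work, sketched below. Whether this is the exact challenge type meant above is an assumption, and the function names and the challenge string format are illustrative. The client must search for a nonce, while the server verifies the answer with a single hash.

```python
import hashlib
import itertools


def has_leading_zero_bits(digest: bytes, bits: int) -> bool:
    """True if the first `bits` bits of the digest are all zero."""
    value = int.from_bytes(digest, "big")
    return value >> (len(digest) * 8 - bits) == 0


def verify(challenge: str, nonce: int, bits: int) -> bool:
    """Server side: one hash to check a submitted nonce."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode("utf-8")).digest()
    return has_leading_zero_bits(digest, bits)


def solve(challenge: str, bits: int) -> int:
    """Client side: brute-force search, about 2**bits hashes on average."""
    for nonce in itertools.count():
        if verify(challenge, nonce, bits):
            return nonce


if __name__ == "__main__":
    nonce = solve("session-abc", 12)
    print("nonce accepted:", verify("session-abc", nonce, 12))
```

Raising `bits` by one roughly doubles the client's expected work while leaving the server's verification cost unchanged, so the difficulty can be increased for connections that look more suspicious.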

User Behavior Analysis

The way a real user interacts with a web page or mobile app differs from the behavior of an automated malicious bot. Factors such as the number of pages visited per session, the time spent on each web page or within a mobile app, and repeat visit frequency all help to differentiate authentic users from bad bots. Defeating bad bots using Behavior Analysis involves creating a user model for each site from historical visitor data and checking for anomalies that may indicate bad bot activity.
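As a minimal sketch of "build a model from history, then check for anomalies", the example below uses per-feature z-scores. The feature names, the sample data, and the threshold of 3 standard deviations are assumptions chosen for illustration; real systems use richer models.

```python
from statistics import mean, stdev

# Hypothetical historical sessions from ordinary visitors.
HISTORY = [
    {"pages_per_session": 4, "seconds_per_page": 30},
    {"pages_per_session": 6, "seconds_per_page": 45},
    {"pages_per_session": 5, "seconds_per_page": 38},
    {"pages_per_session": 7, "seconds_per_page": 52},
    {"pages_per_session": 5, "seconds_per_page": 41},
    {"pages_per_session": 6, "seconds_per_page": 36},
]


def build_model(history: list) -> dict:
    """Compute (mean, standard deviation) for every feature in the history."""
    model = {}
    for feature in history[0]:
        values = [session[feature] for session in history]
        model[feature] = (mean(values), stdev(values))
    return model


def anomalous_features(session: dict, model: dict, threshold: float = 3.0) -> list:
    """Return the features whose z-score exceeds the threshold."""
    flagged = []
    for feature, (mu, sigma) in model.items():
        if sigma > 0 and abs(session[feature] - mu) / sigma > threshold:
            flagged.append(feature)
    return flagged


if __name__ == "__main__":
    model = build_model(HISTORY)
    print(anomalous_features({"pages_per_session": 120, "seconds_per_page": 0.4}, model))
```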

Intent-based Deep Behavior Analysis (IDBA)

Unlike the commonly used interaction-based Behavior Analysis, Intent-based Deep Behavior Analysis (IDBA) is a next-generation technique that conducts behavioral analysis at the level of user intent. IDBA consists of intent encoding, intent analysis, and adaptive learning. It also employs machine learning techniques to detect bad bots that emulate human interactions on a site. Mitigation techniques include limiting attempts on login pages, web authentication pages, and API call authentication pages.

Rate Limiting

Rate Limiting mitigates bad bots and DDoS attacks by restricting the amount of incoming traffic accepted for specific applications and API endpoints, using predefined bandwidth limitation policies. Requests to web applications, GET or POST requests, API queries, and login attempts can all be blocked when a client, an IP address, or an IP and user-agent pair violates Rate Limiting rules. Rate Limiting policies that restrict repeated image or digital downloads can also protect intellectual property from scraping.
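A sliding-window limiter keyed on an IP and user-agent pair is one simple way to enforce such a policy. The class name, the limits, and the choice of passing the timestamp in explicitly are assumptions made to keep the sketch small and easy to test.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client key within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client key -> timestamps of allowed requests

    def allow(self, client_key, now: float) -> bool:
        hits = self._hits[client_key]
        # Discard timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
    key = ("203.0.113.7", "ExampleClient/1.0")  # hypothetical IP and user-agent pair
    print([limiter.allow(key, now=t) for t in (0, 1, 2, 3, 60)])
```

In a live service the caller would pass `time.monotonic()` as `now`, and a deployment with several servers would need shared storage for the counters rather than an in-process dictionary.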

JavaScript Injection

JavaScript Injection techniques can help mitigate bad bot attacks in several ways. Scripts can be placed into web applications to “fingerprint” a user’s browser and distinguish humans from bad bots that emulate “human-like” mouse movements, keystrokes, or clicks. Fingerprinting detection may also involve user agent identification, HTML5 canvas and audio fingerprinting, and protocol-level fingerprinting with TLS and HTTP/2. JavaScript combined with browser cookies can also be used to identify anomalous behavior from unwanted traffic or bad bots as it trends over time.

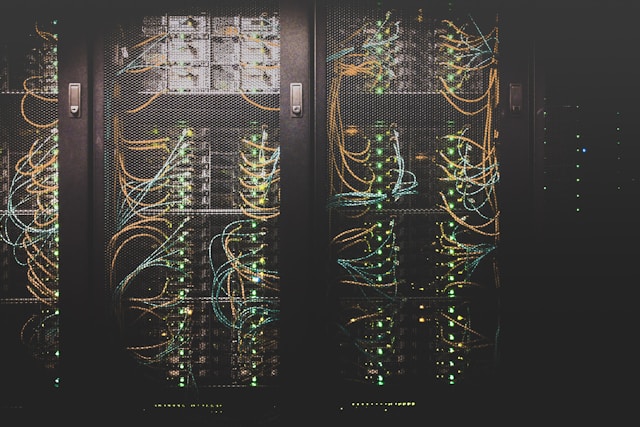

Anycast DDoS Mitigation

Anycast is an IP addressing method that efficiently routes incoming traffic requests to the nearest location or “node.” Using Anycast for selective routing provides network load resilience against DDoS attacks by spreading high traffic volumes across multiple servers and data centers. This prevents network resources from becoming overwhelmed with malicious or irrelevant traffic.

Alternative Content Serving

Serving alternative and cached content when a bad bot is detected gives organizations the ability to mislead bots without blocking them altogether. For instance, e-commerce sites may fool price-scraping bots by serving alternative web pages that look like legitimate pages but show higher prices. Serving cached content when a bot is detected also minimizes server load without affecting site performance.
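The price example can be sketched as a single branch in the page renderer. The function name, the 15 percent markup, and the `suspected_bot` flag (which would come from one of the detection techniques above) are all hypothetical.

```python
def render_price_page(product: dict, suspected_bot: bool, markup: float = 1.15) -> str:
    """Render the real price for users and an inflated decoy price for suspected bots."""
    price = product["price"] * markup if suspected_bot else product["price"]
    return f"{product['name']}: ${price:.2f}"


if __name__ == "__main__":
    widget = {"name": "Widget", "price": 20.0}
    print(render_price_page(widget, suspected_bot=False))
    print(render_price_page(widget, suspected_bot=True))
```

Because the decoy page has the same shape as the real one, a scraper has no obvious signal that it has been detected.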

Challenges

Requests from suspected bots can be redirected to challenges or puzzles such as a CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart), which helps distinguish a bad bot from a human. Online puzzles, such as letter matching, are easy for humans to solve but difficult for automated bots. reCAPTCHA, offered free by Google, is an advanced version of CAPTCHA that requires users to identify text from real-world images such as street address signs, printed books, or newspapers.

Final Thoughts

Bad bots hijack user accounts, create fake accounts, scrape websites for data and personal information, flood websites with traffic in automated distributed denial-of-service attacks, and attack public-facing APIs using constantly changing techniques. Bad bots hide behind dynamic IP addresses. They also change their attack signatures, mimic human behaviors, and take over vast networks of hosts and IoT devices, creating zombie machines that distribute malware across the internet. Deploying an array of countermeasures, ranging from Web Application Firewalls to sophisticated Machine Learning algorithms, is an organization's critical primary line of defense against bad bots.